Built atop the NVIDIA GB10 Grace Blackwell superchip, DGX Spark delivers up to one petaflop of AI performance and supports models of up to 200 billion parameters. The machine pairs 20 Arm cores with a Blackwell GPU, connected through NVLink-C2C to enable high memory bandwidth, and includes a 200 GbE ConnectX-7 interface for clustering. Its power draw is about 170 W, making it usable in a desktop setting.
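The 200-billion-parameter figure is easier to read with some rough arithmetic on weight storage. The sketch below is an illustration only, not Nvidia's sizing method: it counts the memory for model weights alone (ignoring activations, KV cache and runtime overhead) and assumes a 128 GB memory pool, a number that does not appear in this article. The names `weight_footprint_gb`, `fits` and `ASSUMED_MEMORY_GB` are hypothetical.

```python
# Back-of-the-envelope estimate of model weight storage at different precisions.
# Assumption: 128 GB of memory is available; this figure is not stated in the article.
ASSUMED_MEMORY_GB = 128


def weight_footprint_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


def fits(num_params: float, bits_per_param: int,
         memory_gb: float = ASSUMED_MEMORY_GB) -> bool:
    """True if the weights alone fit in the assumed memory pool."""
    return weight_footprint_gb(num_params, bits_per_param) <= memory_gb


if __name__ == "__main__":
    params = 200e9  # the 200-billion-parameter figure quoted above
    for bits in (16, 8, 4):
        size = weight_footprint_gb(params, bits)
        print(f"{bits}-bit weights: {size:.0f} GB, fits: {fits(params, bits)}")
```

Under these assumptions, a 200-billion-parameter model would need roughly 400 GB at 16-bit precision but about 100 GB at 4-bit, which suggests the headline figure depends on heavily quantised weights.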

Nvidia ships the system with its full AI software stack preinstalled, including CUDA libraries, frameworks such as PyTorch, and NIM microservices. The design is intended to support workflows such as tuning image-generation models and building vision agents and chatbots, allowing developers to build and iterate locally before deploying to cloud or server infrastructure.

Multiple original equipment manufacturers, including Acer, ASUS, Dell Technologies, GIGABYTE, HP, Lenovo and MSI, are producing their own versions of DGX Spark and will deliver units via Nvidia’s partner network. The system can be ordered through Nvidia and its partners from 15 October.

Early adopters already include prominent AI organisations like Hugging Face, Meta, Google, Docker, and JetBrains, which are testing and adapting their tools for the DGX Spark environment. Research labs such as NYU Global Frontier Lab, which work on sensitive domains like healthcare, have cited the ability to prototype sophisticated models locally without relying on cloud compute as a key advantage.

The shift from data-centre-only AI compute to local, desktop supercomputing reflects an emerging trend toward decentralised AI workloads. As development demands increasingly push traditional workstations up against memory and software ceilings, compact petaflop-class compute on the desk lets teams experiment at smaller scale and with lower latency.

Nevertheless, the price remains a high barrier for many. At $3,999, DGX Spark targets institutions, deep tech startups and serious developers rather than casual users. Some users have noted that Nvidia has raised the price from earlier expectations by nearly one-third, which may limit adoption beyond elite users.

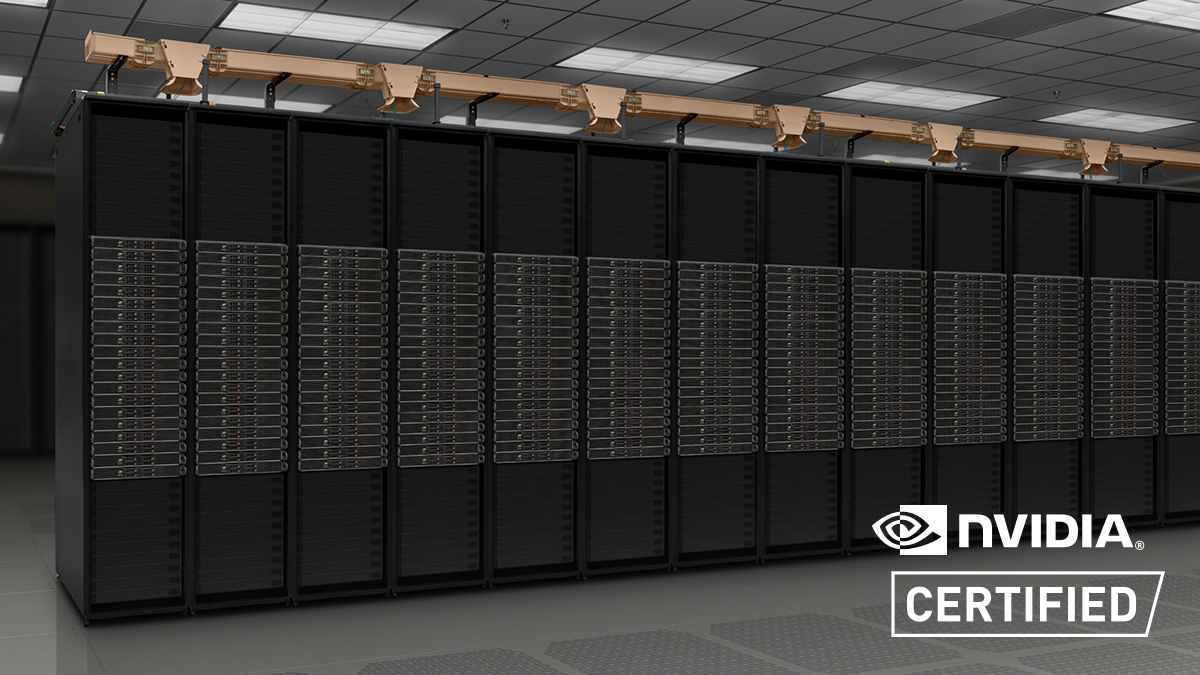

Technical reviewers examining the architecture have highlighted the device’s potential for clustering: users can link multiple DGX Spark units via high-speed networking to handle larger AI workloads. Benchmarks suggest the system supports scale-out configurations and could serve as a building block for modular, locally distributed AI clusters.

Critics caution that while DGX Spark brings impressive hardware density, developing large language models and agentic AI at scale often still requires vast compute, which may continue to push users toward data centres or cloud resources. The balance between what can be done on a desktop and what remains in large infrastructure will determine how broadly devices like DGX Spark change norms in AI development.
